Improving Robustness of Intent Detection Under Adversarial Attacks: A Geometric Constraint Perspective

Image credit: Original Paper

Abstract

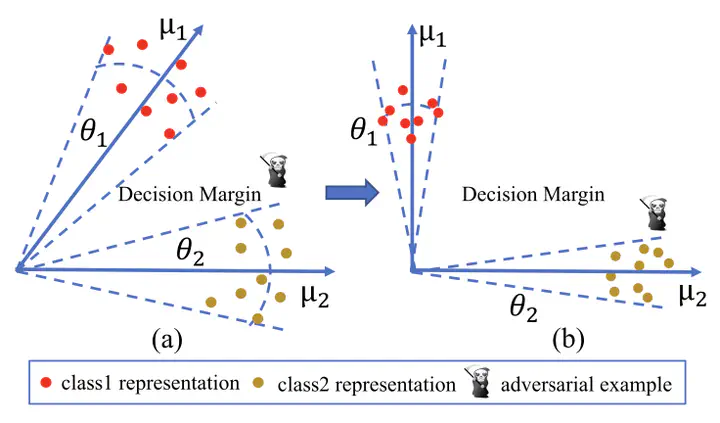

Deep neural network (DNN)-based natural language processing (NLP) systems are vulnerable to adversarial examples, as recent studies have shown. Intent detection in dialog systems is no exception; however, relatively little work has addressed the defense side. Most defense methods for other NLP tasks combine a linear classifier with softmax. Unfortunately, this combination does not encourage the model to learn well-separated feature representations, which makes adversarial examples easy to induce. In this article, we propose a simple yet efficient defense method from the geometric constraint perspective. Specifically, we first propose an M-similarity metric to shrink the variance of intraclass features. Intuitively, better geometric conditions in the feature space yield a lower misclassification probability (MP). We therefore derive, with theoretical guarantees, the optimal geometric constraints on the anchors within each category from the overall MP (OMP). Because the optimal geometric condition is nonconvex, it is hard to satisfy with a traditional optimization process. To this end, we treat these geometric constraints as a manifold optimization problem on the Stiefel manifold, which naturally avoids this difficulty. Experimental results demonstrate that our method significantly improves robustness compared with baselines while retaining excellent performance on normal examples.
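
To make the Stiefel-manifold idea concrete, here is a minimal sketch of one common way to optimize under such a constraint: project the gradient onto the tangent space, take a step, then retract with a QR decomposition. The abstract does not give the paper's actual update rule, loss, or M-similarity metric, so everything below is an assumption for illustration: the anchors are stacked as the columns of a hypothetical matrix `W` with orthonormal columns (which is what membership in the Stiefel manifold means), the gradient is a random stand-in, and the function names, dimensions, and learning rate are invented.

```python
import numpy as np

def project_to_tangent(W, G):
    """Project a Euclidean gradient G onto the tangent space of the
    Stiefel manifold at W (W has orthonormal columns)."""
    WtG = W.T @ G
    sym = 0.5 * (WtG + WtG.T)
    return G - W @ sym

def qr_retract(M):
    """Map a full-column-rank matrix back onto the Stiefel manifold
    using the Q factor of a reduced QR decomposition."""
    Q, R = np.linalg.qr(M)
    # Flip column signs so that diag(R) is nonnegative, which makes
    # the retraction well defined.
    signs = np.sign(np.diag(R))
    signs[signs == 0] = 1.0
    return Q * signs

def stiefel_step(W, G, lr=0.1):
    """One Riemannian gradient-descent step followed by retraction."""
    xi = project_to_tangent(W, G)
    return qr_retract(W - lr * xi)

# Hypothetical sizes: 16-dimensional features, 4 anchors.
rng = np.random.default_rng(0)
d, k = 16, 4
W = qr_retract(rng.standard_normal((d, k)))

for _ in range(50):
    # Stand-in gradient; a real model would backpropagate its loss here.
    G = rng.standard_normal((d, k))
    W = stiefel_step(W, G)

# The columns stay orthonormal after every step, up to rounding error.
orth_error = np.linalg.norm(W.T @ W - np.eye(k))
```

The point of the sketch is that the constraint is never enforced with a penalty term: each update ends on the manifold by construction, which is one reading of how manifold optimization sidesteps the nonconvex constraint described above.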